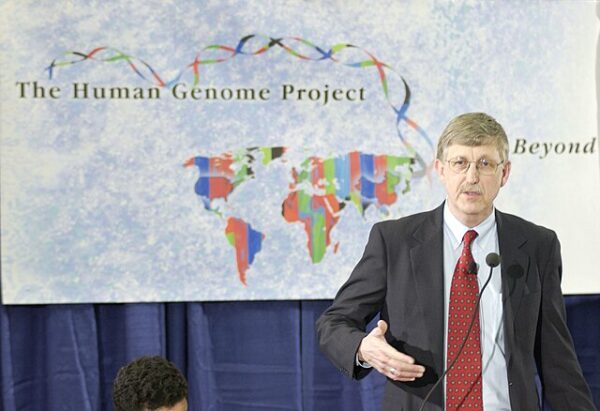

It was a moment suspended between science and scripture: on June 26, 2000, mankind read the first legible draft of its own instruction manual. In coordinated announcements from Washington, D.C., and London, the leaders of the public Human Genome Project and its private-sector competitor, Celera Genomics, appeared side by side at the White House to declare that they had sequenced roughly 90% of the human genome, a composite code of more than 3 billion base pairs. Though incomplete and riddled with gaps, the “rough draft” marked the most ambitious cartographic effort since humans first traced coastlines: a biological map not of terrain, but of the body itself.

What had begun in 1990 as a federally funded moonshot into the invisible, under the joint auspices of the National Institutes of Health and the Department of Energy, produced a shared victory years ahead of its original fifteen-year timetable. That acceleration was driven, in no small part, by the entrance in 1998 of Celera Genomics, led by the iconoclastic biologist Craig Venter, whose rapid whole-genome shotgun sequencing challenged the methodical, map-first pace of the public consortium. For a time, the contest appeared to pit commercial secrecy against academic openness. Yet under mounting pressure, and with statesmanship unusual in the world of science, a truce was reached. That détente, publicly blessed by President Bill Clinton and Prime Minister Tony Blair, allowed both sides to claim success and preserved the foundational principle that the human genome belongs to no one and to everyone.

In a tone half celebratory, half solemn, President Clinton told the world, “Today, we are learning the language in which God created life.” It was a flourish befitting the occasion, for the genome draft was more than a scientific artifact; it was a mirror held up to mankind. Inherited traits, predispositions to disease or resilience, the traces of ancient migrations and evolutionary dead ends: all were written not in ink or stone, but in the chemical alphabet of adenine, cytosine, guanine, and thymine.

Yet the code alone does not reveal its meaning. As the scientists themselves acknowledged, they had sequenced the letters but not yet deciphered the syntax. The genome, far from offering simple answers, posed new and deeper questions: Which genes matter most? How do they interact? What, in the end, is the boundary between nature and nurture—between inherited fate and individual will?

Nevertheless, the practical promise was immediate and electrifying. With this knowledge, researchers could more rapidly pinpoint and study the genetic mutations behind cancer, Alzheimer’s, cystic fibrosis, and other diseases with a hereditary component. Pharmaceutical development, long plagued by trial and error, might now proceed with far greater precision. In time, doctors could prescribe not just pills but molecular interventions tailored to each patient’s genetic profile.

But as with all revolutions, the genomic era arrived freighted with peril as well as promise. If a person’s future health could be predicted from birth, what then of privacy? Could insurers or employers demand to see the results? Might parents select embryos based on preferred traits? And could the line between healing and enhancement, between medicine and eugenics, be maintained in a world where genes might one day be edited as easily as text?

These questions, once the domain of speculative fiction, had become urgent policy dilemmas. And they remain with us still. But the act that forced them into the open—that first reading of the genome—was irreversible.

The “finished” version of the genome would not arrive until April 2003, in poetic symmetry with the 50th anniversary of Watson and Crick’s description of the double helix. But it was the 2000 announcement that breached the wall. In that moment, humanity ceased to be merely the subject of biology and became its reader.